How To Set Up Apache Spark On a Hadoop Cluster

Apache Spark is a distributed computing framework that processes massive volumes of data using Resilient Distributed Datasets (RDDs), which can be created from data held in external storage systems such as HDFS. It is widely used for machine learning, data analytics, and graph-parallel processing.

We discussed Spark in detail in the previous blog post. This tutorial walks you through installing and testing Apache Spark on a Hadoop cluster running Ubuntu 20.04.

Step 1: Download and install the Apache Spark binaries

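Spark binaries are available from https://spark.apache.org/downloads.html. Download the latest version from that page. The commands below use Spark 3.2.1 prebuilt for Apache Hadoop 3.2; if you pick a newer release, change the version numbers accordingly.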
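Because the release number appears in every path in this step, you may find it easier to keep it in variables. The following is a minimal sketch, not part of the original steps: SPARK_VERSION, HADOOP_PROFILE, spark_tarball, spark_url, and download_spark are hypothetical names, and the defaults match the 3.2.1 build used here. It tries the current download mirror first and falls back to the Apache archive, which keeps older releases that the mirror drops.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for Step 1; adjust the defaults to the release you chose.
SPARK_VERSION="${SPARK_VERSION:-3.2.1}"
HADOOP_PROFILE="${HADOOP_PROFILE:-hadoop3.2}"
CDN_BASE="https://dlcdn.apache.org/spark"             # current releases only
ARCHIVE_BASE="https://archive.apache.org/dist/spark"  # every past release

# spark_tarball VERSION PROFILE -> e.g. spark-3.2.1-bin-hadoop3.2.tgz
spark_tarball() {
  printf 'spark-%s-bin-%s.tgz\n' "$1" "$2"
}

# spark_url BASE VERSION PROFILE -> full download URL under BASE
spark_url() {
  printf '%s/spark-%s/%s\n' "$1" "$2" "$(spark_tarball "$2" "$3")"
}

# Download from the mirror (falling back to the archive), extract the
# tarball, and rename the extracted directory to "spark", as this step does.
download_spark() {
  local file
  file="$(spark_tarball "$SPARK_VERSION" "$HADOOP_PROFILE")"
  wget -O "$file" "$(spark_url "$CDN_BASE" "$SPARK_VERSION" "$HADOOP_PROFILE")" ||
    wget -O "$file" "$(spark_url "$ARCHIVE_BASE" "$SPARK_VERSION" "$HADOOP_PROFILE")" ||
    return 1
  tar -xzf "$file" && mv "${file%.tgz}" spark
}
```

After sourcing this file on node-master, run download_spark from /home/hdoop to get the same result as the three commands that follow.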

Log on to node-master as the Hadoop user (hdoop) and run the following commands:

$ wget https://dlcdn.apache.org/spark/spark-3.2.1/spark-3.2.1-bin-hadoop3.2.tgz

$ tar -xvf spark-3.2.1-bin-hadoop3.2.tgz

$ mv spark-3.2.1-bin-hadoop3.2 spark

Step 2: Spark Master Configuration

2.1 Integrate Spark with YARN

Edit the bashrc file /home/hdoop/.bashrc and add the following lines:

export SPARK_HOME=/home/hdoop/spark

export PATH=/home/hdoop/spark/bin:$PATH

export LD_LIBRARY_PATH=/home/hdoop/hadoop/lib/native:$LD_LIBRARY_PATH
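Spark's YARN mode also needs to find the Hadoop client configuration: when spark.master is yarn, Spark requires HADOOP_CONF_DIR or YARN_CONF_DIR to be set. A minimal sketch of extra ~/.bashrc lines follows, assuming Hadoop lives in /home/hdoop/hadoop (the directory the LD_LIBRARY_PATH line already points into) with its configuration in the standard etc/hadoop subdirectory. The warning check is a hypothetical convenience, not something Spark requires.

```shell
# Assumption: Hadoop is installed in /home/hdoop/hadoop; keep HADOOP_HOME if already set.
export HADOOP_HOME="${HADOOP_HOME:-/home/hdoop/hadoop}"
# Spark on YARN reads the cluster's core-site.xml and yarn-site.xml from here.
export HADOOP_CONF_DIR="$HADOOP_HOME/etc/hadoop"

# Warn (without failing the shell) if the YARN client config is missing.
if [ ! -f "$HADOOP_CONF_DIR/yarn-site.xml" ]; then
  echo "warning: $HADOOP_CONF_DIR/yarn-site.xml not found" >&2
fi
```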

Reload the file so the new variables take effect in your current shell:

$ source ~/.bashrc

Alternatively, log out and log back in.
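To confirm that the new variables are active, you could use a small check like the one below. check_spark_env is a hypothetical helper, not part of Spark: it only verifies that SPARK_HOME is set and that $SPARK_HOME/bin is on PATH. The commented last line shows how you might follow it with spark-submit --version.

```shell
# check_spark_env (hypothetical helper): verify the ~/.bashrc settings took effect.
check_spark_env() {
  if [ -z "${SPARK_HOME:-}" ]; then
    echo "SPARK_HOME is not set; run 'source ~/.bashrc' or log in again" >&2
    return 1
  fi
  # Wrap PATH in colons so the first and last entries also match.
  case ":$PATH:" in
    *":$SPARK_HOME/bin:"*) echo "OK: $SPARK_HOME/bin is on PATH" ;;
    *) echo "$SPARK_HOME/bin is missing from PATH" >&2; return 1 ;;
  esac
}

# Usage on node-master: run the check, then ask Spark for its version.
# check_spark_env && spark-submit --version
```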

Next, create spark-defaults.conf by copying the template that ships with Spark:

$ cp $SPARK_HOME/conf/spark-defaults.conf.template $SPARK_HOME/conf/spark-defaults.conf

Edit $SPARK_HOME/conf/spark-defaults.conf and set spark.master to yarn:

spark.master yarn
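If you would rather script this edit than open an editor, a sketch like the one below sets a property idempotently: it rewrites an existing uncommented entry or appends a new one, so running it twice still leaves a single spark.master line. set_spark_property is a hypothetical helper, and it relies on GNU sed's -i option, which Ubuntu provides.

```shell
# set_spark_property FILE KEY VALUE (hypothetical helper)
# spark-defaults.conf uses "key<whitespace>value" lines; lines starting with # are comments.
set_spark_property() {
  local file="$1" key="$2" value="$3"
  if grep -qE "^[[:space:]]*${key}[[:space:]]" "$file"; then
    # Replace the value of the existing, uncommented entry.
    sed -i -E "s|^[[:space:]]*${key}[[:space:]].*|${key} ${value}|" "$file"
  else
    # No active entry yet: append one.
    printf '%s %s\n' "$key" "$value" >> "$file"
  fi
}

# Usage:
# set_spark_property "$SPARK_HOME/conf/spark-defaults.conf" spark.master yarn
```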

Spark is now ready to interact with your YARN cluster.

2.2 Edit spark-env.sh

Move to the Spark conf folder and create spark-env.sh by copying its template:

$ cd /home/hdoop/spark/conf

$ cp spark-env.sh.template spark-env.sh

$ nano spark-env.sh

Then set the following parameters for a multi-node Hadoop cluster:
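As an illustration only, and not necessarily the settings the author used, a spark-env.sh for the master, slave01, and slave02 layout could set standalone-mode variables that are documented in spark-env.sh.template. The hostname matches the workers entries used in this tutorial; the core and memory figures are assumed values to replace with what your machines can spare, and the two ports are Spark's defaults written out explicitly.

```shell
# Illustrative spark-env.sh fragment; all values are assumptions.
# Hostname the standalone master binds to ("master", as in the workers file).
export SPARK_MASTER_HOST=master
# Default master RPC port and web UI port, stated explicitly.
export SPARK_MASTER_PORT=7077
export SPARK_MASTER_WEBUI_PORT=8080
# Total cores and memory each worker may hand out to executors on its machine.
export SPARK_WORKER_CORES=2
export SPARK_WORKER_MEMORY=2g
```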

2.3 Add Workers

Edit the workers configuration file in /home/hdoop/spark/conf (older Spark releases call this file slaves):

$ nano workers

For a multi-node cluster, add one hostname per line:

master

slave01

slave02

For a single-node setup, add only:

localhost
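On a multi-node cluster, start-all.sh connects to each host in the workers file over SSH and starts a worker from the same Spark directory, so slave01 and slave02 also need a copy of /home/hdoop/spark. One way to push it from master is sketched below, assuming passwordless SSH for the hdoop user (which start-all.sh needs anyway) and rsync installed on every node. list_workers and sync_spark_to_workers are hypothetical helpers.

```shell
# list_workers FILE: print worker hostnames, skipping blank lines and # comments.
list_workers() {
  grep -vE '^[[:space:]]*(#|$)' "$1" | awk '{ print $1 }'
}

# sync_spark_to_workers [WORKERS_FILE]: copy $SPARK_HOME to the same path on each remote worker.
sync_spark_to_workers() {
  local workers_file="${1:-$SPARK_HOME/conf/workers}" host
  for host in $(list_workers "$workers_file"); do
    # The local machine already has Spark; skip it.
    if [ "$host" = localhost ] || [ "$host" = "$(hostname)" ]; then
      continue
    fi
    echo "Syncing $SPARK_HOME to $host"
    # Trailing slashes copy the directory's contents into the same path remotely.
    rsync -a "$SPARK_HOME/" "$host:$SPARK_HOME/" || return 1
  done
}
```

A for loop is used instead of a while-read loop so that rsync's SSH session cannot consume the rest of the host list from standard input.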

2.4 Start Spark Cluster

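To start Spark's standalone master and the workers listed in the workers file, run the following command on master:

$ $SPARK_HOME/sbin/start-all.sh

Calling the script through $SPARK_HOME works from any directory (the previous steps leave you in the conf folder) and avoids picking up Hadoop's own start-all.sh, which has the same name. Applications that read spark.master yarn from spark-defaults.conf, such as spark-sql in Step 5, are still submitted to YARN rather than to this standalone master.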

Step 3: Check whether services have been started

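To check the running daemons, run the following command on master and on each slave. On master you should see a Master process, plus a Worker because master is also listed in the workers file; on each slave you should see a Worker.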

$ jps

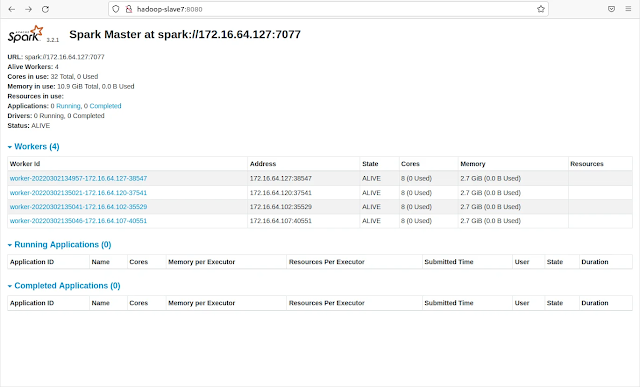
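To script the same check across the cluster, you could filter jps output for the expected daemon names: Master on master, and Worker on every host in the workers file. This is a sketch with hypothetical helper names; it assumes the same passwordless SSH that start-all.sh uses and that jps is on the PATH of non-interactive SSH sessions on each node.

```shell
# has_daemon NAME: succeed if jps output on stdin lists a JVM whose name is NAME.
has_daemon() {
  awk -v name="$1" '$2 == name { found = 1 } END { exit !found }'
}

# check_cluster [WORKERS_FILE]: report the Master locally and a Worker on each host.
check_cluster() {
  local workers_file="${1:-$SPARK_HOME/conf/workers}" host
  if jps | has_daemon Master; then
    echo "$(hostname): Master running"
  else
    echo "$(hostname): Master NOT running" >&2
  fi
  for host in $(grep -vE '^[[:space:]]*(#|$)' "$workers_file"); do
    # -n stops ssh from reading this script's stdin.
    if ssh -n "$host" jps | has_daemon Worker; then
      echo "$host: Worker running"
    else
      echo "$host: Worker NOT running" >&2
    fi
  done
}
```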

Step 4: Spark Web UI

Open the Spark master web UI in a browser to see the worker nodes, running applications, and cluster resources (from another machine, use http://master:8080/ instead):

http://localhost:8080/
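For a scripted check, the standalone master also serves its status as JSON under /json on the same port. The sketch below assumes that endpoint and its aliveworkers field, and uses only grep so that no JSON tool is required; alive_workers is a hypothetical helper.

```shell
# alive_workers: read the master's JSON status on stdin and print its "aliveworkers" count.
alive_workers() {
  grep -o '"aliveworkers"[[:space:]]*:[[:space:]]*[0-9]*' | grep -o '[0-9]*$'
}

# Usage on master (assumes the default web UI port 8080):
# curl -s http://localhost:8080/json/ | alive_workers
```

With the three entries in the multi-node workers file, a healthy cluster should report 3.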

Step 5: Start spark-sql

To run SQL queries on the cluster, start the spark-sql shell on any node where Spark is installed:

$ cd /home/hdoop/spark

$ ./bin/spark-sql
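spark-sql also accepts a single statement on the command line with -e, which is handy for a quick smoke test without opening the interactive shell. A minimal sketch follows; run_sql is a hypothetical wrapper and the query is only an example.

```shell
# run_sql STATEMENT (hypothetical wrapper): run one statement non-interactively.
run_sql() {
  "${SPARK_HOME:?SPARK_HOME is not set}/bin/spark-sql" -e "$1"
}

# Usage: with spark.master set to yarn, this starts an application on YARN,
# evaluates the expression, prints the result, and exits.
# run_sql "SELECT 1 + 1"
```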

Step 6: Stop Spark Cluster

To stop the standalone master and all workers, run the following command on master:

$ $SPARK_HOME/sbin/stop-all.sh
