Introduction to Big data with Apache Spark

Limitations of Apache Hive

Apache Hive is a data warehouse system built on Hadoop. It organizes data into tables much like an RDBMS (the data itself lives in HDFS) and lets users query it with a SQL-like language, HiveQL. Hive works mainly with structured data. It is not designed for OLTP workloads, and its high query latency also makes it a poor fit for interactive OLAP. Hive's original default execution engine is MapReduce, and MapReduce performance degrades noticeably as data sizes grow beyond roughly 10 GB. If a query fails midway, Hive cannot resume processing from the point of failure, so the query has to be rerun from the start. These limitations pushed engineers to look for something better than Hive, and that is how Spark came about.

Limitations of MapReduce

The main factor behind the creation of Apache Spark is speed: how fast a computation engine can process data and return results. MapReduce is a capable platform for big data analytics, but sharing data between processing steps is slow, because each job writes its results to the hard disk and the next job has to read them back, and reading from disk is slow. The cost comes from three sources: replication, serialization, and disk I/O.
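To make the cost of disk round trips concrete, here is a minimal pure-Python sketch (not Hadoop or Spark code) that chains the same three steps in two ways: writing every intermediate result to a file with `pickle`, roughly as a chain of MapReduce jobs does, versus passing it straight to the next step in memory. The step functions are hypothetical, and replication, the third cost named above, is not modeled.

```python
import os
import pickle
import tempfile

def step_add_one(xs):
    return [x + 1 for x in xs]

def step_square(xs):
    return [x * x for x in xs]

STEPS = [step_add_one, step_square, step_add_one]

def run_via_disk(data, steps):
    """Persist every intermediate result to a file and read it back,
    paying serialization and disk I/O between each pair of steps."""
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "stage.pkl")
        with open(path, "wb") as f:
            pickle.dump(data, f)
        for step in steps:
            with open(path, "rb") as f:
                current = pickle.load(f)   # read + deserialize
            result = step(current)
            with open(path, "wb") as f:
                pickle.dump(result, f)     # serialize + write
        with open(path, "rb") as f:
            return pickle.load(f)

def run_in_memory(data, steps):
    """Hand each intermediate result directly to the next step."""
    current = data
    for step in steps:
        current = step(current)
    return current

data = list(range(5))
print(run_via_disk(data, STEPS))   # [2, 5, 10, 17, 26]
print(run_in_memory(data, STEPS))  # same result, without the disk round trips
```

Both paths compute the same answer; the only difference is how many times the data is serialized and written to, then read from, storage.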

What is Apache Spark?

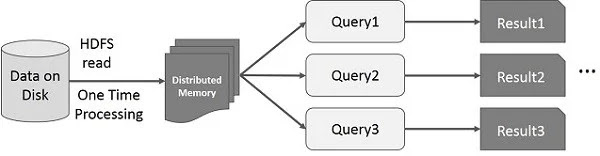

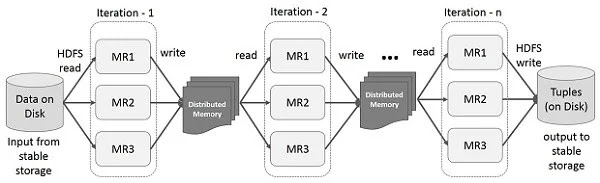

To solve this problem, researchers developed Apache Spark. Spark's main data structure, the RDD (Resilient Distributed Dataset), is fault-tolerant and supports in-memory computation. Whether an operation is interactive or iterative, Spark keeps intermediate results in RAM whenever possible, so a result that is needed again soon can be fetched quickly, which increases processing speed. Data goes to secondary storage (disk) only when it does not fit in memory and the chosen storage level allows spilling. An RDD that is used frequently can likewise be kept in RAM by persisting (caching) it.

- Interactive operation: when several different queries are run on the same dataset, Spark can keep that dataset in RAM, so each query avoids reloading it and execution time improves.

- Iterative operation: when the intermediate result of one step is reused by the next step (as in many machine learning algorithms), that result is kept in RAM and shared across the cluster instead of being written to disk.

Figure: Interactive operation
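The benefit of keeping a reused dataset in memory can be sketched in plain Python. The `CachedDataset` class below is hypothetical; it loosely mirrors what calling `cache()` on an RDD or DataFrame asks Spark to do (compute once, then reuse for later queries) and does not model spilling to disk or eviction.

```python
class CachedDataset:
    """Computes its data on first use and keeps it in memory afterwards."""

    def __init__(self, compute):
        self._compute = compute      # function that (re)builds the data
        self._cached = None
        self.compute_count = 0       # how many times the data was built

    def get(self):
        if self._cached is None:
            self.compute_count += 1
            self._cached = self._compute()
        return self._cached

def load_numbers():
    # Stands in for an expensive read from distributed storage.
    return list(range(1, 101))

ds = CachedDataset(load_numbers)

# Interactive use: several different queries over the same dataset.
total = sum(ds.get())
evens = len([x for x in ds.get() if x % 2 == 0])
largest = max(ds.get())

print(total, evens, largest, ds.compute_count)  # 5050 50 100 1
```

Three queries ran, but the expensive load happened only once.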

Spark is a framework that processes large amounts of data, held in RDDs, read from external distributed datasets. Spark provides in-memory cluster computation: it keeps data in RAM rather than on external storage (hard disk), which reduces processing time, and it processes data in parallel. Spark lets users work in multiple languages, including Java, Scala, R, and Python. Spark also pipelines operations, and it supports SQL, including complex queries, for data analytics.
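The parallel processing mentioned here can be sketched with Python's standard `concurrent.futures` module. This is only an illustration, not how Spark schedules work: the partitions are list slices and the workers are local threads, whereas Spark runs tasks on executors spread across a cluster. The choice of 4 partitions is an arbitrary assumption.

```python
from concurrent.futures import ThreadPoolExecutor

def partition(data, num_partitions):
    """Split data into roughly equal, contiguous chunks."""
    size = -(-len(data) // num_partitions)  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]

def process_partition(chunk):
    # Work done independently on one partition: sum of squares.
    return sum(x * x for x in chunk)

data = list(range(1, 11))
parts = partition(data, 4)

with ThreadPoolExecutor(max_workers=4) as pool:
    partial_results = list(pool.map(process_partition, parts))

result = sum(partial_results)  # combine the per-partition results
print(parts)   # [[1, 2, 3], [4, 5, 6], [7, 8, 9], [10]]
print(result)  # 385
```

Each partition is processed without looking at the others, and only the small partial results are combined at the end.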

Spark Features:

1. Fast Analytics:

Spark splits data into chunks (partitions), processes them in parallel, and keeps them in memory, which makes processing much faster.

2. Spark streaming:

Spark can process large volumes of live streaming data through its Spark Streaming module.

3. Supports various APIs:

Spark provides APIs in several programming languages: Python, Java, Scala, and R.

4. Data processing:

Spark lets applications extract and process data from a large number of datasets.

5. Integrated with tools:

Spark can be integrated with many big data analytics tools, such as Hive and Apache Iceberg. It can read data for analysis from various external sources, such as Hive tables, external storage systems, and structured data files. Spark works not only with structured data but also with unstructured data, which gives users a lot of flexibility and ease of use.

Components of Spark:

a. Apache Spark core:

Spark Core is Spark's underlying execution engine. It provides the in-memory computation feature, along with task scheduling and fault recovery.

b. Spark SQL:

It is a module used to work with structured data and allows users to write SQL queries.

c. Spark streaming:

Spark Streaming lets users process live streaming data.

d. MLlib

MLlib is Spark's machine learning library, which lets users apply machine learning algorithms to large datasets.

e. GraphX

A library that helps users work with graphs and run graph computations.

f. ETL (Extract, Transform, Load)

Extraction means pulling the data out of the source. In Spark, the source can be a Hive table, HBase, a JSON file, a Parquet file, any other external source, etc.

Transformation means checking whether the extracted data is clean and cleaning it where needed. For example, empty values in the dataset should be replaced with nulls, and duplicate data must be removed.

Loading means loading the data to the destination. The destination can be a database or a data warehouse or even any text file or flat file where the data has to be copied.
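A minimal end-to-end ETL sketch in plain Python, assuming a small JSON string as the source and an in-memory SQLite database as the destination. Both are stand-ins: in Spark the source might be a Hive table or Parquet files and the load step a DataFrame write. The transform step applies the two cleaning rules described above (empty values become nulls, duplicates are removed). The `people` table and the sample records are hypothetical.

```python
import json
import sqlite3

raw = '''[
  {"id": 1, "name": "Asha", "city": "Pune"},
  {"id": 2, "name": "Ravi", "city": ""},
  {"id": 1, "name": "Asha", "city": "Pune"}
]'''

def extract(text):
    return json.loads(text)

def transform(records):
    cleaned, seen = [], set()
    for rec in records:
        # Replace empty strings with None (stored as NULL).
        row = {k: (None if v == "" else v) for k, v in rec.items()}
        key = (row["id"], row["name"], row["city"])
        if key in seen:          # drop exact duplicates
            continue
        seen.add(key)
        cleaned.append(row)
    return cleaned

def load(rows, conn):
    conn.execute("CREATE TABLE people (id INTEGER, name TEXT, city TEXT)")
    conn.executemany(
        "INSERT INTO people (id, name, city) VALUES (:id, :name, :city)", rows
    )
    conn.commit()

conn = sqlite3.connect(":memory:")
load(transform(extract(raw)), conn)
print(conn.execute("SELECT id, name, city FROM people ORDER BY id").fetchall())
# [(1, 'Asha', 'Pune'), (2, 'Ravi', None)]
```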

IMPORTANT CONCEPTS OF APACHE SPARK

1. Dataframes:

A DataFrame is a data structure similar to a spreadsheet: it represents data as a two-dimensional table of rows and named columns. DataFrames are immutable. A DataFrame carries the schema of the table, which defines the data type of each column, and wherever a value is missing in a row or column, it is represented as null. DataFrames are distributed across the nodes of a cluster, so if a node fails, the lost data can be recomputed on other nodes. Distribution also lets Spark run more computations in parallel, which saves time. DataFrames can be constructed from the following sources:

- Structured data files

- Tables stored in Hive

- Existing RDDs (resilient distributed datasets)
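The schema-plus-null behavior can be illustrated with a small, hypothetical `Frame` class in plain Python (this is not Spark's DataFrame API). The schema fixes each column's name and type, missing values become `None` (standing in for null), and rows are stored as tuples so they cannot be edited in place; `with_column` returns a new frame instead of changing the existing one.

```python
class Frame:
    """Immutable table with a schema: a list of (column name, type) pairs."""

    def __init__(self, schema, records):
        self.schema = tuple(schema)
        rows = []
        for rec in records:
            row = []
            for name, typ in self.schema:
                value = rec.get(name)          # missing column -> None
                if value is not None and not isinstance(value, typ):
                    raise TypeError(f"{name} must be {typ.__name__}")
                row.append(value)
            rows.append(tuple(row))
        self.rows = tuple(rows)

    def columns(self):
        return [name for name, _ in self.schema]

    def with_column(self, name, typ, fn):
        """Return a NEW frame with an extra column computed from each row."""
        records = [dict(zip(self.columns(), r)) for r in self.rows]
        for rec in records:
            rec[name] = fn(rec)
        return Frame(list(self.schema) + [(name, typ)], records)

people = Frame([("name", str), ("age", int)],
               [{"name": "Asha", "age": 31}, {"name": "Ravi"}])
older = people.with_column(
    "age_next_year", int,
    lambda r: None if r["age"] is None else r["age"] + 1)

print(people.rows)  # (('Asha', 31), ('Ravi', None))
print(older.rows)   # (('Asha', 31, 32), ('Ravi', None, None))
```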

2. Resilient Distributed Dataset (RDD):

RDD stands for Resilient Distributed Dataset. An RDD is immutable: once created, it cannot be changed. As "resilient" suggests, it is fault-tolerant: if a node in the cluster fails, the RDD can recover its lost partitions automatically, even if multiple transformations had been applied to it before the failure. The RDD is the fundamental data structure of Apache Spark. It stores data by partitioning it across multiple nodes, which enables parallel processing, improves query performance, and makes the data easier to manage.
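A toy model of the two properties described here, partitioning and immutability, in plain Python. `MiniRDD` is a hypothetical class, not Spark's `RDD`: it holds its data as a tuple of partitions, and `map` and `filter` return a new `MiniRDD` rather than changing the existing one. The round-robin split in `parallelize` is an arbitrary choice for this sketch.

```python
class MiniRDD:
    def __init__(self, partitions):
        # Each partition is an immutable tuple of elements.
        self.partitions = tuple(tuple(p) for p in partitions)

    @classmethod
    def parallelize(cls, data, num_partitions):
        """Deal elements round-robin into num_partitions partitions."""
        parts = [[] for _ in range(num_partitions)]
        for i, x in enumerate(data):
            parts[i % num_partitions].append(x)
        return cls(parts)

    def map(self, fn):
        return MiniRDD([[fn(x) for x in p] for p in self.partitions])

    def filter(self, pred):
        return MiniRDD([[x for x in p if pred(x)] for p in self.partitions])

    def collect(self):
        return [x for p in self.partitions for x in p]

base = MiniRDD.parallelize(range(1, 7), 3)
doubled = base.map(lambda x: x * 2)

print(base.partitions)     # ((1, 4), (2, 5), (3, 6))
print(doubled.collect())   # [2, 8, 4, 10, 6, 12]
print(base.collect())      # unchanged: [1, 4, 2, 5, 3, 6]
```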

3. Fault-tolerant:

Spark RDDs are fault-tolerant in general, and this property is especially important for live streaming data, which must remain recoverable even when the system fails. Data can be recovered in the following ways:

Received data is replicated to multiple nodes, so copies of it exist on more than one node. If a node fails, the data can be retrieved from another node that holds a copy.

If a failure occurs before the data has been copied to other nodes, the data can only be recovered from the source.

Apache Mesos, an open-source cluster manager that sits between the application layer and the operating system, can keep backup (standby) masters so that the master is not a single point of failure.

At the time of failure, data can also be recovered with the help of the DAG. The DAG records information about each RDD: which operations were applied to it and which tasks ran on which of its partitions. When a failure occurs, Spark detects which RDD partitions were lost and uses the DAG to recompute only those partitions from their parent data.
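Lineage-based recovery can be sketched in plain Python under simplifying assumptions: the source partitions can always be re-read, the recorded operations are deterministic, and a failure is simulated by deleting one computed partition. All names are hypothetical; in Spark this bookkeeping is done by the scheduler, not by user code.

```python
# Source data, split into partitions, that can always be re-read
# (e.g. blocks of a file in distributed storage).
SOURCE = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]

# The recorded lineage: the ordered list of operations applied.
LINEAGE = [
    ("map", lambda x: x * 10),
    ("filter", lambda x: x % 20 != 0),
]

def compute_partition(index):
    """Rebuild one partition by replaying the lineage on its source."""
    data = list(SOURCE[index])
    for kind, fn in LINEAGE:
        if kind == "map":
            data = [fn(x) for x in data]
        elif kind == "filter":
            data = [x for x in data if fn(x)]
    return data

computed = {i: compute_partition(i) for i in range(len(SOURCE))}

del computed[1]                          # simulate losing partition 1
lost = [i for i in range(len(SOURCE)) if i not in computed]
for i in lost:
    computed[i] = compute_partition(i)   # recompute only what was lost

print(lost)                                       # [1]
print([computed[i] for i in range(len(SOURCE))])  # [[10, 30], [50], [70, 90]]
```

Only the lost partition is rebuilt; the other partitions are left untouched.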

4. DAG (Directed Acyclic Graph)

The DAG lets Spark optimize a job before running it. For example, suppose a query applies a map() and then a filter(). For DataFrame and SQL queries, Spark's Catalyst optimizer can move the filter ahead of the map (predicate pushdown) when that does not change the result, so irrelevant data is discarded early and the map processes fewer rows, saving time and memory. For plain RDDs, Spark does not reorder user functions; it instead groups consecutive narrow transformations into stages. The DAG provides the following advantages:

- Many queries can be executed at the same time with the help of DAG.

- Fault tolerance is achievable because of DAG.

- Better optimization.
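Why moving a filter ahead of a map can be both safe and cheaper is easy to show in plain Python. In this sketch, which assumes a hypothetical record layout and uses a `stats` counter purely for illustration, the filter reads only the `id` field, which the map leaves unchanged, so both orders give the same rows while the reordered one calls the map function far fewer times.

```python
rows = [{"id": i, "price": i * 10} for i in range(1, 11)]
stats = {"map_calls": 0}

def add_tax(row):
    """The map step: adds a derived column and leaves 'id' untouched."""
    stats["map_calls"] += 1
    return {**row, "price_with_tax": row["price"] * 1.1}

def keep(row):
    """The filter step: reads only 'id'."""
    return row["id"] > 7

# As written: map first, then filter, so add_tax runs on every row.
stats["map_calls"] = 0
as_written = [r for r in map(add_tax, rows) if keep(r)]
calls_as_written = stats["map_calls"]

# Reordered: filter first, then map, so add_tax runs only on surviving rows.
stats["map_calls"] = 0
pushed_down = [add_tax(r) for r in rows if keep(r)]
calls_pushed_down = stats["map_calls"]

print(as_written == pushed_down)             # True
print(calls_as_written, calls_pushed_down)   # 10 3
```

The reordering is only valid because the filter does not depend on anything the map computes; an optimizer has to prove that before swapping the two.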

5. Datasets:

Spark Datasets are an extension of DataFrames and combine the features of DataFrames and RDDs. With a Dataset, there is no need to specify the schema by hand: Spark derives it from the type of the objects the Dataset holds (for example, a Scala case class) using encoders. Like DataFrames, Datasets use the Catalyst optimizer to optimize queries. Datasets also provide a type-safe interface: the data types of the columns are checked by the compiler, so syntax errors and type errors are caught at compile time. The typed Dataset API is available in Scala and Java.

6. Transformation in Spark:

DataFrames and RDDs in Spark are immutable, so once they are created they cannot be modified. Yet the stored data often needs changes to meet some requirement, which raises the question: HOW DO WE CHANGE DATAFRAMES AND RDDs AS PER REQUIREMENT? If data could not be changed as required, it would be of little use.

TRANSFORMATION is the way Spark lets us modify DataFrames and RDDs as required. The instructions given to Spark to derive a modified version of an existing DataFrame or RDD are called transformations. A transformation always creates a new RDD from an existing RDD without updating the existing one.

There are two types of transformation:

1. Narrow Dependencies: each input partition contributes to at most one output partition, so no data has to move between partitions to build a new partition. For operations such as map() or filter(), this is a one-to-one mapping.

map(), filter(), union(), mapPartitions(), and flatMap() are some examples of narrow transformations.

Spark automatically pipelines consecutive narrow transformations, so several of them are executed together, in memory, within a single stage.

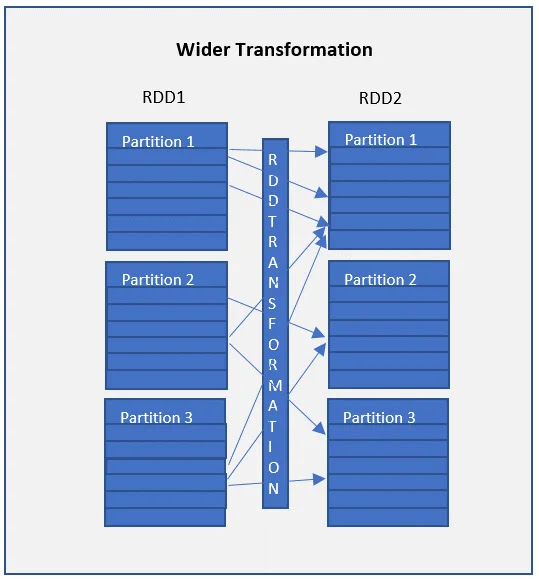

2. Wide Dependencies: the output of a wide transformation draws on data that resides in many different partitions, so data must be shuffled between partitions across the cluster. Because of this shuffling, wide transformations are also called shuffle transformations.

groupByKey(), reduceByKey(), aggregateByKey(), join() (unless both inputs are already partitioned the same way), and repartition() are some examples of wide transformations.
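The difference between the two kinds of dependency can be shown on plain Python lists standing in for partitions. In this hypothetical sketch, the narrow step (`map_values`) works on each partition on its own, while the wide step (`group_by_key`) must first shuffle: every record is sent to the output partition chosen by hashing its key, so one output partition can draw on every input partition. Using `hash(key) % n` follows the idea of hash partitioning but is not Spark's exact partitioner; integer keys are used so that Python's `hash` is stable across runs.

```python
def map_values(partitions, fn):
    """Narrow: each output partition comes from exactly one input partition."""
    return [[(k, fn(v)) for k, v in part] for part in partitions]

def shuffle(partitions, num_out):
    """Send each record to the output partition chosen by its key's hash."""
    out = [[] for _ in range(num_out)]
    for part in partitions:
        for k, v in part:
            out[hash(k) % num_out].append((k, v))
    return out

def group_by_key(partitions, num_out):
    """Wide: needs a shuffle so all values for a key meet in one partition."""
    grouped = []
    for part in shuffle(partitions, num_out):
        groups = {}
        for k, v in part:
            groups.setdefault(k, []).append(v)
        grouped.append(sorted(groups.items()))
    return grouped

# Two input partitions of (key, value) pairs.
data = [[(1, 5), (2, 3), (3, 1)], [(2, 4), (1, 6), (4, 2)]]

doubled = map_values(data, lambda v: v * 2)
print(doubled)
# [[(1, 10), (2, 6), (3, 2)], [(2, 8), (1, 12), (4, 4)]]
print(group_by_key(doubled, 2))
# [[(2, [6, 8]), (4, [4])], [(1, [10, 12]), (3, [2])]]
```

Notice that key 1 starts in both input partitions but ends up in a single output partition; that movement is the shuffle.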

7. RDD lineage graph:

When a new RDD is created from an existing RDD, the new RDD holds a pointer to its parent, the RDD from which it was created. These pointers record the dependencies between RDDs, and the dependencies are stored as a graph called the lineage graph.
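A minimal sketch of a lineage chain, using a hypothetical `Node` class in plain Python: every derived dataset stores a pointer to its parent and a description of the operation that produced it, and following those pointers back reconstructs the history. This sketch gives each node a single parent; in Spark, operations such as join() give an RDD more than one parent, which is why lineage is a graph. Spark's own lineage can be inspected with `toDebugString` on an RDD, whose output looks different.

```python
class Node:
    def __init__(self, name, parent=None, op=None):
        self.name = name
        self.parent = parent   # pointer to the dataset this one came from
        self.op = op           # operation that produced this dataset

    def derive(self, name, op):
        return Node(name, parent=self, op=op)

    def lineage(self):
        """Walk parent pointers back to the source, oldest first."""
        chain, node = [], self
        while node is not None:
            chain.append(node.name if node.op is None
                         else f"{node.name} <- {node.op}")
            node = node.parent
        return list(reversed(chain))

source = Node("lines")
words = source.derive("words", "flatMap(split)")
pairs = words.derive("pairs", "map(word -> (word, 1))")
counts = pairs.derive("counts", "reduceByKey(+)")

for step in counts.lineage():
    print(step)
# lines
# words <- flatMap(split)
# pairs <- map(word -> (word, 1))
# counts <- reduceByKey(+)
```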

8. Lazy evaluation:

Spark does not compute the result of a transformation on an RDD right away; it waits until an action is called on the RDD. Actions are used when the actual data is needed, and they either write the result to external storage or return it to the driver. Actions such as collect() send data from the executors (the processes that run the tasks) to the driver (the process that runs the main program and schedules tasks on the executors). Until an action is called, each transformation is simply added to the DAG, pointing to its parent RDD, and the RDD is not loaded until it is needed. This is called lazy evaluation.

Following are the advantages of lazy evaluation:

- Programs can be organized into smaller operations. Because the driver sees the whole chain of transformations before anything runs, it can group similar small operations together, reducing the number of passes over the data.

- Values are computed only when they are required, which reduces the back-and-forth between the driver and the cluster and so increases processing speed.

- Queries can be optimized, because Spark sees the full plan before executing it.

- Only the values that are actually needed are computed, which saves time.
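Lazy evaluation can be sketched in plain Python with a hypothetical `LazyDataset` class (not Spark's API): transformations only record a step and return a new object, and nothing runs until the action `collect()` is called. At that point the recorded steps are applied to each element in a single pass, similar in spirit to how Spark pipelines narrow transformations.

```python
class LazyDataset:
    def __init__(self, source, steps=()):
        self.source = source
        self.steps = tuple(steps)   # recorded, not yet executed

    def map(self, fn):
        return LazyDataset(self.source, self.steps + (("map", fn),))

    def filter(self, pred):
        return LazyDataset(self.source, self.steps + (("filter", pred),))

    def collect(self):
        """Action: run all recorded steps, one element at a time."""
        out = []
        for x in self.source:
            keep = True
            for kind, fn in self.steps:
                if kind == "map":
                    x = fn(x)
                elif not fn(x):      # a filter step rejected the element
                    keep = False
                    break
            if keep:
                out.append(x)
        return out

calls = []

def square(x):
    calls.append(x)          # record when the function actually runs
    return x * x

ds = LazyDataset(range(1, 6)).map(square).filter(lambda x: x > 5)
print(calls)         # [] (defining transformations ran nothing)
print(ds.collect())  # [9, 16, 25]
print(calls)         # [1, 2, 3, 4, 5]
```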

Relational Database

A relational database stores data in tables made up of rows and named columns, with the relationships between the data defined in advance. Each row is uniquely identified by a key called the primary key. A row in one table can be linked to rows in another table through a foreign key. Because these relationships are defined up front, data from multiple tables can be combined (for example, with a join) without reorganizing the tables.
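Primary and foreign keys can be tried out with Python's built-in `sqlite3` module. The `customers` and `orders` tables and their rows are hypothetical. Note that SQLite enforces foreign keys only after `PRAGMA foreign_keys = ON`.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")   # SQLite enforces FKs only when enabled
conn.execute("""
    CREATE TABLE customers (
        id   INTEGER PRIMARY KEY,           -- uniquely identifies each row
        name TEXT NOT NULL
    )""")
conn.execute("""
    CREATE TABLE orders (
        id          INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customers(id),  -- foreign key
        amount      REAL
    )""")
conn.executemany("INSERT INTO customers VALUES (?, ?)", [(1, "Asha"), (2, "Ravi")])
conn.executemany("INSERT INTO orders VALUES (?, ?, ?)",
                 [(10, 1, 250.0), (11, 1, 90.0), (12, 2, 40.0)])

# The foreign key links each order to its customer, so a join combines them.
rows = conn.execute("""
    SELECT c.name, SUM(o.amount)
    FROM customers c JOIN orders o ON o.customer_id = c.id
    GROUP BY c.name ORDER BY c.name""").fetchall()
print(rows)  # [('Asha', 340.0), ('Ravi', 40.0)]

# An order pointing at a customer that does not exist is rejected.
try:
    conn.execute("INSERT INTO orders VALUES (13, 99, 5.0)")
except sqlite3.IntegrityError as err:
    print("rejected:", err)
```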

Important aspects of relational database:

a. SQL

With SQL, new rows can be added to a table, data in any row can be updated, irrelevant rows can be deleted, and so on.
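These three kinds of change correspond to SQL's `INSERT`, `UPDATE`, and `DELETE` statements. A short sketch using Python's built-in `sqlite3` module, with a hypothetical `products` table:

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT, price REAL)")

# Add rows.
conn.executemany("INSERT INTO products (id, name, price) VALUES (?, ?, ?)",
                 [(1, "pen", 10.0), (2, "notebook", 45.0), (3, "old stock", 0.0)])

# Update data in a particular row.
conn.execute("UPDATE products SET price = ? WHERE id = ?", (12.0, 1))

# Delete an irrelevant row.
conn.execute("DELETE FROM products WHERE name = ?", ("old stock",))
conn.commit()

print(conn.execute("SELECT id, name, price FROM products ORDER BY id").fetchall())
# [(1, 'pen', 12.0), (2, 'notebook', 45.0)]
```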

b. Data integrity

Data integrity means the correctness and consistency of the data.

c. Transaction

ALL-OR-NOTHING: either the whole transaction is executed, or no part of it is executed.

d. ACID Compliance

- Atomic: A transaction succeeds as a whole or not at all; if any part of it fails, the entire transaction is rolled back.

- Consistent: Ensures that the data integrity constraints must be followed

- Isolated: Each transaction executes independently; concurrent transactions do not interfere with each other.

- Durable: Changes committed to the database are permanent and are not lost, even if the system fails.
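Atomicity can be demonstrated with Python's `sqlite3` module: a connection used as a context manager commits the transaction if the block succeeds and rolls it back if an exception escapes. The bank-transfer scenario and `accounts` table are hypothetical. The second transfer below fails after the debit but before the credit, and the rollback undoes the debit.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE accounts (name TEXT PRIMARY KEY, balance INTEGER)")
conn.executemany("INSERT INTO accounts VALUES (?, ?)", [("A", 100), ("B", 50)])
conn.commit()

def transfer(conn, src, dst, amount, fail_midway=False):
    # "with conn" commits on success and rolls back if an exception escapes.
    with conn:
        conn.execute("UPDATE accounts SET balance = balance - ? WHERE name = ?",
                     (amount, src))
        if fail_midway:
            raise RuntimeError("simulated crash between debit and credit")
        conn.execute("UPDATE accounts SET balance = balance + ? WHERE name = ?",
                     (amount, dst))

def balances(conn):
    return dict(conn.execute("SELECT name, balance FROM accounts ORDER BY name"))

transfer(conn, "A", "B", 30)
print(balances(conn))   # {'A': 70, 'B': 80}

try:
    transfer(conn, "A", "B", 20, fail_midway=True)
except RuntimeError:
    pass
print(balances(conn))   # still {'A': 70, 'B': 80}: the debit was rolled back
```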

Data structure:

A data structure is a format for arranging, organizing, and storing data. Different structures serve different purposes, supporting efficient organization and retrieval of data in addition to storage. A data structure captures the data, the relationships between data items, and the operations that can be applied to them. Data structures are essential for managing large amounts of data efficiently, and they are how abstract data types are implemented in applications; for example, relational databases commonly use B-trees to index their tables. Data structures are often classified by their characteristics. For instance, in a linear structure the elements form a sequence, while in a nonlinear structure they are arranged hierarchically or as a network. Common types of data structures include arrays, lists, stacks, queues, graphs, linked lists, and trees.
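For a concrete feel for two of the linear structures listed, here is a stack (last in, first out) and a queue (first in, first out) in Python, using a plain list and `collections.deque` respectively:

```python
from collections import deque

stack = []
for item in ["a", "b", "c"]:
    stack.append(item)        # push onto the top
popped_from_stack = [stack.pop() for _ in range(3)]      # LIFO order

queue = deque()
for item in ["a", "b", "c"]:
    queue.append(item)        # enqueue at the back
popped_from_queue = [queue.popleft() for _ in range(3)]  # FIFO order

print(popped_from_stack)  # ['c', 'b', 'a']
print(popped_from_queue)  # ['a', 'b', 'c']
```

`deque` is used for the queue because removing from its front is cheap, whereas removing from the front of a list shifts every remaining element.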
