Computer Systems Performance Modeling And Evaluation - Part 4

Introduction

Having discussed workload characterization, we now move on to performance measures, their categories, and the characteristics of a good performance metric.

Performance Measures

The performance measures of interest for a computer system depend on its application domain. For example, the requirements for an interactive system differ from those for a batch system.

Broadly speaking, all performance measures deal with three basic issues:

- how quickly a given task can be accomplished,

- how well the system can deal with failures and other unusual situations, and

- how effectively the system uses the available resources.

Categories of Performance Measures

1. Responsiveness:

These measures evaluate how quickly a given task can be accomplished by the system, for example, processing time, response time, turnaround time, and queue length. They are all random variables and are typically discussed in terms of their mean values as well as their variances.
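As a minimal sketch of how such random measures are summarized, the following Python snippet computes the mean and the sample variance of a set of response times. The helper name `summarize` and the sample values are hypothetical, chosen only for illustration.

```python
import statistics

def summarize(samples):
    """Return the mean and the sample variance (n - 1 denominator) of the samples."""
    return statistics.mean(samples), statistics.variance(samples)

# Hypothetical response times (seconds) observed for ten requests.
response_times = [0.12, 0.15, 0.11, 0.30, 0.14, 0.13, 0.16, 0.12, 0.45, 0.14]

mean_rt, var_rt = summarize(response_times)
print(f"mean response time = {mean_rt:.3f} s, variance = {var_rt:.5f} s^2")
```

The two slow requests in this made-up sample pull the mean up and add most of the variance, which is why both statistics are usually reported together.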

2. Usage Level:

These measures evaluate how well the various components of the system are being used. Possible measures are throughput and the utilization of various resources. Utilization is the fraction of time that a particular resource is busy, for example, CPU utilization.
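The following sketch computes the two usage-level measures just mentioned from a single observation window. The function names and the measurements are hypothetical; utilization is taken as busy time divided by the length of the window, and throughput as completed jobs divided by the same window.

```python
def utilization(busy_time: float, observation_time: float) -> float:
    """Fraction of the observation interval during which the resource was busy."""
    if observation_time <= 0:
        raise ValueError("observation_time must be positive")
    return busy_time / observation_time

def throughput(completed_jobs: int, observation_time: float) -> float:
    """Completed jobs per unit time over the observation interval."""
    if observation_time <= 0:
        raise ValueError("observation_time must be positive")
    return completed_jobs / observation_time

# Hypothetical measurements: the CPU was busy for 45 s of a 60 s window,
# during which 120 jobs completed.
cpu_util = utilization(45.0, 60.0)
jobs_per_sec = throughput(120, 60.0)
print(f"CPU utilization = {cpu_util:.0%}, throughput = {jobs_per_sec:.1f} jobs/s")
```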

3. Dependability:

These measures indicate how reliable the system is over the long run. Possible measures include reliability, MTTF (mean time to failure), and MTTR (mean time to repair). The MTBF (mean time between failures) is then given by MTBF = MTTF + MTTR.
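A minimal sketch of how MTTF, MTTR, and MTBF could be derived from a log of failures and repairs. The log format and its values are hypothetical assumptions: each entry records the hours of operation before a failure and the hours needed to repair that failure.

```python
from statistics import mean

# Hypothetical log: each tuple is (hours of operation before a failure,
# hours needed to repair that failure).
failure_log = [(400.0, 4.0), (520.0, 6.0), (380.0, 2.0)]

def dependability_summary(log):
    """Return (MTTF, MTTR, MTBF) in hours, using MTBF = MTTF + MTTR."""
    mttf = mean(up for up, _ in log)
    mttr = mean(repair for _, repair in log)
    return mttf, mttr, mttf + mttr

mttf, mttr, mtbf = dependability_summary(failure_log)
print(f"MTTF = {mttf:.1f} h, MTTR = {mttr:.1f} h, MTBF = {mtbf:.1f} h")
```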

4. Productivity:

These measures indicate how effectively a user can get his or her work accomplished. Possible measures are user-friendliness, maintainability, and understandability.

Characteristics of a good performance metric

It is useful to understand the characteristics of a good performance metric, since using a poorly chosen metric can lead to erroneous or misleading conclusions.

1. Linearity:

If the value of the metric changes by a certain ratio, the actual performance of the machine should also change by the same ratio. For example, suppose we upgrade from system A to a new system B whose speed metric (i.e., execution-rate metric) is three times as large as the same metric on A. We would then expect system B to run the application programs in one-third of the time taken by system A.
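The linearity requirement can be checked by comparing the speedup predicted by a rate metric with the speedup actually observed. This sketch assumes a higher-is-better rate metric; the function name `is_linear` and its 5% tolerance are hypothetical choices, not a standard test.

```python
def is_linear(rate_a, rate_b, time_a, time_b, tolerance=0.05):
    """Check whether the change in a rate metric predicts the change in run time.

    For a linear (proportional) rate metric, rate_b / rate_a should equal
    time_a / time_b, i.e. the observed speedup. `tolerance` is the allowed
    relative difference (a hypothetical threshold, not a standard value).
    """
    predicted_speedup = rate_b / rate_a
    observed_speedup = time_a / time_b
    return abs(predicted_speedup - observed_speedup) <= tolerance * observed_speedup

# B's metric is three times A's; B runs the program in 10 s versus 30 s on A.
print(is_linear(rate_a=100, rate_b=300, time_a=30.0, time_b=10.0))  # True
# Same metric ratio, but B only runs in 20 s: the metric overstates the gain.
print(is_linear(rate_a=100, rate_b=300, time_a=30.0, time_b=20.0))  # False
```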

Not all types of metrics satisfy this proportionality requirement. Logarithmic metrics, such as the decibel (dB) scale used to describe the intensity of sound, are nonlinear: an increase of 10 dB corresponds to a tenfold increase in the intensity of the observed sound.
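To make the nonlinearity of the decibel scale concrete, the sketch below converts a decibel increase into an intensity ratio using the standard relationship ratio = 10^(dB/10). The function name `intensity_ratio` is hypothetical. Equal additive steps in the metric correspond to multiplicative jumps in the underlying quantity, so going from +10 dB to +20 dB does not double the intensity; it multiplies it by another factor of ten.

```python
def intensity_ratio(db_increase: float) -> float:
    """Factor by which sound intensity grows for a given increase in decibels."""
    return 10 ** (db_increase / 10)

for delta in (10, 20, 30):
    print(f"+{delta} dB -> intensity x {intensity_ratio(delta):g}")
```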

2. Reliability:

A performance metric is considered to be reliable if system A always outperforms system B when the corresponding values of the metric for both systems indicate that system A should outperform system B.

Several commonly used performance metrics do not in fact satisfy this requirement. The MIPS metric is notoriously unreliable. It is not unusual for one processor to have a higher MIPS rating than another processor while the second processor actually executes a specific program in less time than does the first processor. Such a metric is essentially useless for summarizing performance, and we say that it is unreliable.
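A minimal sketch of a reliability check: for every pair of systems, the one with the better metric value should also run the program in less time. The function name, the assumption that a higher metric value means better, and the sample ratings and run times are all hypothetical.

```python
from itertools import combinations

def is_reliable(metric, exec_time):
    """Return True if, for every pair of systems, a higher metric value
    (higher = better, as for a rate metric) never comes with a longer
    execution time for the program being run."""
    for a, b in combinations(metric, 2):
        if metric[a] > metric[b] and exec_time[a] > exec_time[b]:
            return False
        if metric[b] > metric[a] and exec_time[b] > exec_time[a]:
            return False
    return True

# Hypothetical ratings and measured run times (seconds) for one program.
mips_rating = {"P1": 250, "P2": 180}
run_time = {"P1": 12.0, "P2": 9.5}
print(is_reliable(mips_rating, run_time))  # False: P1 rates higher but is slower
```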

3. Repeatability:

A performance metric is repeatable if the same value of the metric is measured each time the same experiment is performed. Note that this also implies that a good metric is deterministic.

4. Ease of measurement:

The more difficult a metric is to measure directly, or to derive from other measured values, the more likely it is that the metric will be determined incorrectly. The only thing worse than a bad metric is a metric whose value is measured incorrectly.

5. Consistency:

A consistent performance metric is one for which the units of the metric and its precise definition are the same across different systems and different configurations of the same system. While the necessity for this characteristic would also seem obvious, it is not satisfied by many popular metrics, such as MIPS and MFLOPS.

6. Independence:

Many purchasers of computer systems compare the performance metric values of systems. There is a great deal of pressure on manufacturers to design their machines to optimize the value obtained for a particular metric and to influence the composition of the metric to their benefit. A good metric should be independent of outside influences to prevent corruption of its meaning.

Processor and system performance metrics

1. The clock rate

This is the frequency of the processor's central clock. The implication for the buyer is that a 250 MHz system, for instance, must always be faster at solving the user's problem than a 200 MHz system. However, this performance metric completely ignores how much computation is actually accomplished in each clock cycle, it ignores the complex interactions of the processor with the memory and input/output subsystems, and it ignores the possibility that the processor is not the performance bottleneck at all.

It is very repeatable (characteristic 3), since it is a constant for a given system. It is easy to measure (characteristic 4), since it is most likely stamped on the box. The value of MHz is precisely defined across all systems, so it is consistent (characteristic 5). Finally, it is independent of any sort of manufacturer's games (characteristic 6).

However, the unavoidable shortcomings of using this value as a performance metric are that it is nonlinear (characteristic 1) and unreliable (characteristic 2). As many owners of personal computer systems can attest, buying a system with a faster clock in no way assures that their programs will run correspondingly faster. Thus, we conclude that the processor's clock rate is not a good metric of performance.
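One way to see why clock rate alone is unreliable is the standard processor performance relationship: execution time = instruction count × cycles per instruction (CPI) / clock rate. In this sketch the instruction counts and CPI values are hypothetical; they show a 200 MHz processor finishing the same program sooner than a 250 MHz one because it needs fewer cycles per instruction. The relationship only models processor time, so it still leaves out the input/output effects mentioned above.

```python
def execution_time(instruction_count: int, cpi: float, clock_hz: float) -> float:
    """Processor execution time in seconds: instructions x cycles per instruction / clock rate."""
    return instruction_count * cpi / clock_hz

# Hypothetical: the same program running on two processors.
t_250 = execution_time(instruction_count=1_000_000_000, cpi=2.0, clock_hz=250e6)
t_200 = execution_time(instruction_count=1_000_000_000, cpi=1.2, clock_hz=200e6)
print(f"250 MHz: {t_250:.1f} s, 200 MHz: {t_200:.1f} s")
```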

2. MIPS

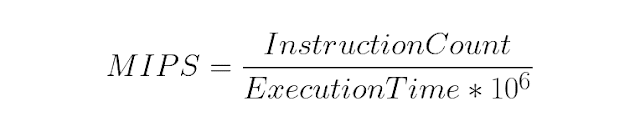

The MIPS metric is an attempt to develop a rate metric for computer systems that allows a direct comparison of their speeds. Thus, MIPS, which is an acronym for millions of instructions executed per second, is defined by

$$\mathrm{MIPS} = \frac{n}{t_e \times 10^6}$$

where $t_e$ is the time required to execute $n$ total instructions. MIPS is easy to measure (characteristic 4), repeatable (characteristic 3), and independent (characteristic 6).

Unfortunately, it does not satisfy any of the other characteristics of a good performance metric. It is not linear since, like the clock rate, doubling the MIPS rate does not necessarily double the resulting performance. It is also neither reliable nor consistent, since it does not correlate well with performance at all.

The problem with MIPS as a performance metric is that different processors can do substantially different amounts of computation with a single instruction. For instance, one processor may have a branch instruction that branches after checking the state of a specified condition code bit. Another processor, on the other hand, may have a branch instruction that first decrements a specified count register and then branches after comparing the resulting value in the register with zero.

In the first case, a single instruction does one simple operation, whereas in the second case, one instruction actually performs several operations. These differences in the amount of computation performed by an instruction are at the heart of the differences between RISC and CISC processors and render MIPS essentially useless as a performance metric.
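The sketch below applies the MIPS definition to two hypothetical processors running the same loop. Processor X is assumed to need separate decrement, compare, and branch instructions, while processor Y is assumed to have a single decrement-and-branch instruction, so it executes fewer instructions overall. All instruction counts and times are illustrative assumptions.

```python
def mips(n_instructions: int, t_e: float) -> float:
    """MIPS = n / (t_e * 10**6), with t_e in seconds."""
    return n_instructions / (t_e * 1e6)

# Hypothetical: the same loop on two processors. X uses several simple
# instructions per iteration; Y uses one more powerful instruction.
x_mips = mips(n_instructions=300_000_000, t_e=1.5)
y_mips = mips(n_instructions=120_000_000, t_e=1.0)
print(f"X: {x_mips:.0f} MIPS in 1.5 s, Y: {y_mips:.0f} MIPS in 1.0 s")
```

X earns the higher MIPS rating (200 versus 120) yet takes longer to finish, which is exactly the unreliability described above.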

3. MFLOPS

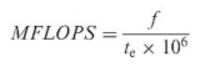

MFLOPS is an acronym for Millions of FLoating-point Operations executed Per Second. It tries to correct the primary shortcoming of the MIPS metric by defining an arithmetic operation on two floating-point (i.e., fractional) quantities as the basic unit of computation. The MFLOPS metric is a definite improvement over the MIPS metric, since the results of a floating-point computation are more clearly comparable across computer systems than is the execution of a single instruction.

MFLOPS is thus calculated as

$$\mathrm{MFLOPS} = \frac{f}{t_e \times 10^6}$$

where $f$ is the number of floating-point operations executed in $t_e$ seconds. A more subtle problem with MFLOPS is agreeing on exactly how to count the number of floating-point operations in a program.

For instance, many of the Cray vector computer systems performed a floating-point division operation using successive approximations involving the reciprocal of the denominator and several multiplications. Similarly, some processors can calculate transcendental functions, such as sin, cos, and log, in a single instruction, while others require several multiplications, additions, and table look-ups. Should these operations be counted as single or multiple floating-point operations?

Counting each such operation as a single floating-point operation would intuitively seem to make the most sense. Counting it as multiple operations, however, would increase the value of f in the MFLOPS calculation, thereby artificially inflating the rating. This flexibility in counting the total number of floating-point operations causes MFLOPS to violate characteristic 6 of a good performance metric. It is also unreliable (characteristic 2) and inconsistent (characteristic 5).
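The counting ambiguity can be illustrated with a small sketch. The program mix, the run time, and the assumption that each division is carried out as one reciprocal approximation plus three multiplications are all hypothetical; the point is that the same run yields different MFLOPS ratings depending only on how divisions are counted.

```python
def mflops(f: int, t_e: float) -> float:
    """MFLOPS = f / (t_e * 10**6), with t_e in seconds."""
    return f / (t_e * 1e6)

# Hypothetical program: 40 million adds/multiplies plus 10 million divisions,
# run in 2 seconds. Assume each division is one reciprocal approximation
# followed by 3 multiplications.
adds_mults = 40_000_000
divisions = 10_000_000
t_e = 2.0

count_as_one = adds_mults + divisions * 1    # each division = 1 operation
count_as_many = adds_mults + divisions * 4   # each division = 4 operations
print(f"{mflops(count_as_one, t_e):.0f} vs {mflops(count_as_many, t_e):.0f} MFLOPS")
```

Here the identical run is rated at 25 MFLOPS when each division counts as one operation and 40 MFLOPS when it counts as four.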

4. SPEC

To standardize the definition of the performance of a computer system, several computer manufacturers banded together to form the System Performance Evaluation Cooperative (SPEC). This group identified a set of integer and floating-point benchmark programs intended to reflect the way most workstation-class computer systems were actually used. It also standardized the methodology for measuring performance with these programs:

- Measure the time required to execute each program in the set on the system being tested.

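- Normalize each execution time from the first step by comparing it with the time required to execute the same program on a standard basis machine. SPEC reports this as the ratio of the basis machine's time to the measured time, so larger ratios indicate a faster system.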

- Average together all of these normalized values using the geometric mean to produce a single-number performance metric.
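A minimal sketch of the three steps above, using hypothetical program names and execution times (requires Python 3.8 or later for `statistics.geometric_mean`). It computes each normalized value as reference time divided by measured time, so larger is better; dividing the other way simply inverts the final score. The function name `spec_style_score` is hypothetical.

```python
from statistics import geometric_mean

def spec_style_score(measured, reference):
    """Normalize each program's time to the reference machine and combine
    the ratios with a geometric mean (reference / measured, larger is better)."""
    ratios = [reference[prog] / measured[prog] for prog in reference]
    return geometric_mean(ratios)

# Hypothetical execution times in seconds.
reference_machine = {"compress": 100.0, "compile": 200.0, "fft": 50.0}
system_a = {"compress": 50.0, "compile": 100.0, "fft": 50.0}
system_b = {"compress": 25.0, "compile": 200.0, "fft": 25.0}

score_a = spec_style_score(system_a, reference_machine)
score_b = spec_style_score(system_b, reference_machine)
print(f"A: {score_a:.3f}, B: {score_b:.3f}")
```

One reason for using the geometric mean is that the ratio `score_a / score_b` does not depend on which reference machine is chosen, because the reference times cancel when the two systems' geometric means are divided.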

5. QUIPS

QUIPS stands for quality improvements per second. It was developed in conjunction with the HINT benchmark program and is a fundamentally different type of performance metric: it treats the quality of the solution as the user's final goal. The quality is rigorously defined on the basis of the mathematical characteristics of the problem being solved.

Dividing the measure of solution quality by the time taken to achieve that level of quality produces QUIPS. It has several of the characteristics of a good performance metric. The mathematically precise definition of quality for the defined problem makes this metric insensitive to outside influences (characteristic 6) and entirely self-consistent when it is ported to different machines (characteristic 5).

It is also easily repeatable (characteristic 3) and it is linear (characteristic 1) since, for the particular problem chosen for the HINT benchmark, the resulting measure of quality is linearly related to the time required to obtain the solution.
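Once quality has been defined, QUIPS itself is a simple ratio. The sketch below assumes the quality values are already available from a run; the checkpoint data and the function name `quips` are hypothetical, and HINT's actual mathematical definition of quality is not reproduced here.

```python
def quips(quality: float, seconds: float) -> float:
    """QUIPS = solution quality reached / time taken to reach it."""
    return quality / seconds

# Hypothetical checkpoints (elapsed seconds, quality reached) from one run.
checkpoints = [(0.5, 1.0e3), (1.0, 2.1e3), (2.0, 4.0e3)]
for t, q in checkpoints:
    print(f"t = {t:.1f} s: {quips(q, t):.0f} QUIPS")
```

Because quality grows roughly linearly with time in this hypothetical run, the QUIPS value stays roughly constant across checkpoints.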

Drawbacks

1. It is not always a reliable metric (characteristic 2), due to its narrow focus on floating-point and memory system performance.

2. It is generally a very good metric for numerical programs. However, it does not exercise other aspects of the system that matter when executing other types of application programs, such as the input/output subsystem, the instruction cache, and the operating system's ability to multiprogram.


Thank you for reading!

This is PART 4 of the series, in which I have explained performance measures, their four categories, and the six characteristics of a good performance metric.

If you found this article useful, feel free to go for PART 5 of this awesome blog series.

Cheers!
