How to Set Up Hive LLAP on a Hadoop Cluster

What is Hive LLAP?

In this blog post, we share our experience running Hive LLAP as a YARN Service. Before getting started, check that the required programs are installed.

Apache Hive LLAP (Live Long And Process) is a set of long-running daemon processes that execute queries on a multi-tenant Apache Hadoop YARN cluster. LLAP extends Apache Hive with asynchronous spindle-aware I/O, prefetching and caching of column chunks, and multi-threaded, JIT-friendly operator pipelines. We'll also talk about how we moved LLAP from Apache Slider to the YARN Service framework.

It is critical for the Apache Hive/LLAP community to concentrate on the application's key features. That means spending less time on the application's deployment paradigm and less time learning YARN internals for creation, security, upgrades, and other aspects of lifecycle management. For this reason, Apache Slider was chosen to do the job, and since its first version LLAP has run on Apache Hadoop YARN 2.x using Slider.

This is what the directory of LLAP's Apache Slider wrapper scripts and configuration files looked like, viewed recursively:

    ├── app_config.json
    ├── metainfo.xml
    ├── package
    │   └── scripts
    │       ├── argparse.py
    │       ├── llap.py
    │       ├── package.py
    │       ├── params.py
    │       └── templates.py
    └── resources.json

With the introduction of first-class services support in Apache Hadoop 3, it was important to migrate LLAP seamlessly from Slider to the YARN Service framework; HIVE-18037 covers this work. With the YARN Service framework, the same recursive view is now clean:

    └── Yarnfile

Step 1: Run hive --service llap

$ hive --service llap --name llap0 --instances 2 --size 2g --loglevel INFO --cache 1g --executors 2 --iothreads 5 --args "-XX:+UseG1GC -XX:+ResizeTLAB -XX:+UseNUMA -XX:-ResizePLAB" --javaHome $JAVA_HOME
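The flags in this command size each daemon: --instances sets how many daemons run, --size the memory of each daemon container, --cache the off-heap cache inside that container, --executors the number of executor threads, and --iothreads the number of I/O threads. A quick arithmetic check before launching can catch impossible combinations. The helpers below are hypothetical (they are not part of Hive) and rest on a simplifying assumption: each container must hold the executors' JVM heap plus the off-heap cache. Real deployments also reserve some headroom, which this sketch ignores.

```shell
#!/usr/bin/env bash
# Hypothetical sizing helpers (not part of Hive). All values are in MB.
# Assumption: container (--size) = executor heap + off-heap cache (--cache),
# ignoring any extra headroom a real deployment would reserve.

# llap_heap_budget_mb SIZE_MB CACHE_MB
# Prints the memory left for the JVM heap once the cache is carved out.
llap_heap_budget_mb() {
  local size_mb=$1 cache_mb=$2
  if (( cache_mb >= size_mb )); then
    echo "error: --cache (${cache_mb} MB) must be smaller than --size (${size_mb} MB)" >&2
    return 1
  fi
  echo $(( size_mb - cache_mb ))
}

# llap_heap_per_executor_mb SIZE_MB CACHE_MB EXECUTORS
# Prints the approximate heap available to each executor thread.
llap_heap_per_executor_mb() {
  local size_mb=$1 cache_mb=$2 executors=$3
  if (( executors <= 0 )); then
    echo "error: --executors must be positive" >&2
    return 1
  fi
  local heap_mb
  heap_mb=$(llap_heap_budget_mb "$size_mb" "$cache_mb") || return 1
  echo $(( heap_mb / executors ))
}

# llap_cluster_cache_mb INSTANCES CACHE_MB
# Prints the total off-heap cache across all daemons.
llap_cluster_cache_mb() {
  local instances=$1 cache_mb=$2
  echo $(( instances * cache_mb ))
}
```

With the values from the command above (--size 2g, --cache 1g, --executors 2, --instances 2), these helpers report 1024 MB of heap per daemon, 512 MB per executor, and 2048 MB of cache across the cluster, under the stated assumption.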

Step 2: Modify hive-site.xml and yarn-site.xml
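As a hedged sketch of this step, the first helper below prints hive-site.xml properties commonly used to send Hive-on-Tez queries to LLAP: hive.execution.mode=llap, hive.llap.execution.mode=all (try to run every query fragment in LLAP), and hive.llap.daemon.service.hosts=@llap0, which points at the daemons registered under the --name given in Step 1 through the ZooKeeper hosts in hive.zookeeper.quorum. On the YARN side, yarn.scheduler.maximum-allocation-mb must be at least the --size of one daemon container, otherwise YARN rejects the container request; the second helper checks that. Both helpers are hypothetical, the ZooKeeper host names are placeholders, and every property should be verified against your Hive and Hadoop versions.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (not part of Hive or Hadoop) for Step 2.

# llap_hive_site_properties LLAP_NAME ZK_QUORUM
# Prints <property> entries to paste inside <configuration> in hive-site.xml.
llap_hive_site_properties() {
  local llap_name=$1 zk_quorum=$2
  cat <<EOF
<property>
  <name>hive.execution.engine</name>
  <value>tez</value>
</property>
<property>
  <name>hive.execution.mode</name>
  <value>llap</value>
</property>
<property>
  <name>hive.llap.execution.mode</name>
  <value>all</value>
</property>
<property>
  <name>hive.llap.daemon.service.hosts</name>
  <value>@${llap_name}</value>
</property>
<property>
  <name>hive.zookeeper.quorum</name>
  <value>${zk_quorum}</value>
</property>
EOF
}

# yarn_fits_llap_container MAX_ALLOCATION_MB CONTAINER_MB
# Succeeds if yarn.scheduler.maximum-allocation-mb can hold one daemon container.
yarn_fits_llap_container() {
  local max_alloc_mb=$1 container_mb=$2
  (( max_alloc_mb >= container_mb ))
}
```

For example, `llap_hive_site_properties llap0 zk1,zk2,zk3` prints the entries for the llap0 cluster from Step 1, and `yarn_fits_llap_container 4096 2048` succeeds because a 2 GB daemon fits under a 4 GB maximum allocation.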

Step 3: Start LLAP Daemons

$ llap-yarn-$ddmmmyyyy/run.sh
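run.sh submits the LLAP application to YARN, and the daemons need some time to come up. A small polling helper can wait until the status command from the next step reports that all daemons are running. This helper is hypothetical (not part of Hive), and it assumes that the report printed by hive --service llapstatus contains the state RUNNING_ALL once every instance is up; confirm that for your Hive version before relying on it.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Hive): re-run a status command until its
# output contains PATTERN, or give up after TIMEOUT_SECS.
#
# wait_for_output PATTERN TIMEOUT_SECS INTERVAL_SECS CMD [ARGS...]
wait_for_output() {
  local pattern=$1 timeout_secs=$2 interval_secs=$3
  shift 3
  if (( interval_secs <= 0 )); then
    echo "INTERVAL_SECS must be positive" >&2
    return 2
  fi
  local waited=0 out
  while (( waited < timeout_secs )); do
    # Capture the output first, so a non-zero exit status (for example while
    # the service is still launching) does not abort the loop.
    out=$("$@" 2>/dev/null) || true
    if grep -qF -- "$pattern" <<<"$out"; then
      return 0
    fi
    sleep "$interval_secs"
    waited=$(( waited + interval_secs ))
  done
  echo "timed out after ${timeout_secs}s waiting for '${pattern}'" >&2
  return 1
}

# Example usage (assumes RUNNING_ALL appears in the llapstatus report):
#   llap-yarn-$ddmmmyyyy/run.sh
#   wait_for_output RUNNING_ALL 300 10 hive --service llapstatus
```

Passing the status command as arguments, rather than hard-coding it, keeps the helper testable with a stand-in command and reusable for other YARN services.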

Check LLAP Status

$ hive --service llapstatus

Why was it introduced?

The main problem with Hive was that every SQL query launched a new YARN application, which added start-up latency to each query. Several improvements were introduced to speed this up, including Tez, which runs a query as a single DAG of tasks and so reduces execution time. Another problem was container reuse: when a query runs in a container, subsequent queries reuse that same container, so while you are looking at the output you are still holding the container and wasting valuable time.

Benefits

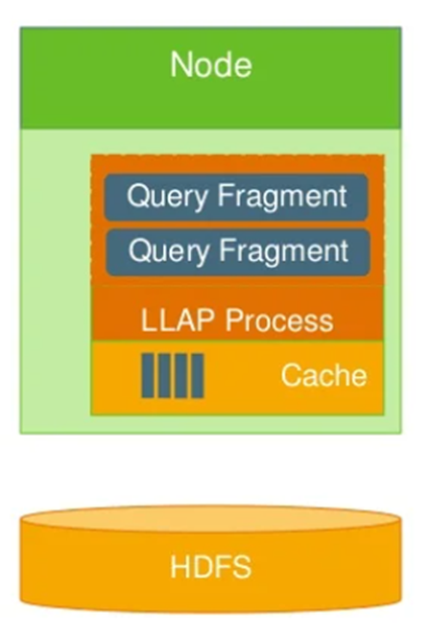

💠 Persistent Daemon

The LLAP daemon runs on the worker (slave) nodes. Because it stays up between queries, it reduces start-up time, makes caching easier, and gives the JVM's just-in-time (JIT) compiler time to optimize frequently executed code. It runs as a YARN process and is stateless. LLAP nodes can exchange data with each other, and they are resilient to failures.

💠 Execution Engine

LLAP works within Hive's existing execution engine rather than replacing it, which preserves Hive's versatility and scalability. It has a configurable footprint: you decide how many resources to allocate to LLAP. This enables minimal latency for short queries, while larger queries can scale dynamically without wasting resources through rigid up-front provisioning.

💠 Query Fragment Execution

LLAP is NOT a query engine; instead, it improves how Hive executes queries. LLAP usually executes query fragments (for example, filters, projections, and partial aggregations) rather than whole queries. Because the daemons run on the worker nodes and allocate resources dynamically, fragments from multiple queries can execute in parallel, which helps with concurrency.

💠 I/O

The daemon performs I/O asynchronously: dedicated I/O threads read and decode the data, and it is handed to the processing threads as soon as it is ready. It supports several file formats, such as ORC and Parquet.

💠 Caching

The daemon caches both the data and the metadata of input files. Metadata is stored on-heap as Java objects, while cached data is kept off-heap. Because the daemon is long-running, the cache stays warm across queries, so query execution is faster than with Hive alone.

💠 Workload Management

LLAP works together with YARN to allocate resources, for example by having YARN provide containers to the running processes.

Shortcomings

LLAP is neither an execution engine like MapReduce, Tez, or Spark nor a storage layer like HDFS, so it is optional: its role is to improve the performance of Hive. We tried it to optimize our query execution, and it proved very useful, drastically improving performance.

What’s next

This blog post showed how simple it is to run a complex application such as LLAP on the YARN Service framework, and what its advantages and disadvantages are. In subsequent posts, we will take a deep dive into query optimization and more. Stay tuned!

@TechAE
