Concepts Of Distributed Computing In Hadoop

DISTRIBUTED COMPUTING SYSTEM

Distributed computing is a computing concept that, in its most general sense, refers to multiple computer systems working on a single problem. As long as the computers are networked, they can communicate with each other to solve the problem. If done properly, the computers perform like a single entity.

Table of Contents

- Distributed Storage

- Access & Location Transparency

- Resource Sharing

- Openness

- Concurrency

- Scalability

- Fault Tolerance

- Remote Procedure Call

Distributed Storage:

Hadoop uses the Hadoop Distributed File System (HDFS) as its primary data storage system. HDFS implements a distributed file system that provides high-performance access to data across highly scalable Hadoop clusters using a NameNode and DataNode architecture.

When HDFS receives data, it divides it into separate blocks and distributes them to different nodes in a cluster.
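To make the idea concrete, here is a minimal sketch of splitting data into blocks and spreading them across nodes. It is not how HDFS is implemented: the function names, the node names, the 4-byte block size, and the round-robin placement are all assumptions chosen for illustration (real HDFS uses much larger blocks, 128 MB by default, and its own placement policy).

```python
from collections import defaultdict

# Tiny block size so the example is easy to follow (assumption, not an HDFS value).
BLOCK_SIZE = 4


def split_into_blocks(data: bytes, block_size: int = BLOCK_SIZE) -> list[bytes]:
    """Cut the payload into fixed-size blocks; the last block may be shorter."""
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]


def place_blocks(blocks: list[bytes], nodes: list[str]) -> dict[str, list[int]]:
    """Assign block indexes to nodes round-robin (a stand-in for real placement)."""
    placement = defaultdict(list)
    for index in range(len(blocks)):
        placement[nodes[index % len(nodes)]].append(index)
    return dict(placement)


# Hypothetical payload and node names, used only for this illustration.
blocks = split_into_blocks(b"hello hadoop!")
placement = place_blocks(blocks, ["node-a", "node-b", "node-c"])
print(blocks)
print(placement)
```

Joining the blocks back together in index order gives the original data, which is roughly what a client does when it reads a file.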

Access & Location Transparency:

Hadoop conceals differences in data representation and the way resources can be accessed by users, and also enables resources to be accessed without knowledge of their physical or network location.

Resource Sharing:

Hadoop YARN is the framework for job scheduling and cluster resource management. It lets multiple applications share the CPU and memory of the same cluster.

Openness:

Hadoop is an open-source project: its source code is freely available for inspection, modification, and analysis, so enterprises can adapt the code to their own requirements.

Concurrency:

With parallel programming, we break up the processing workload into multiple parts, each of which can be executed concurrently on multiple processors.

How is concurrency achieved?

- Data can be split into chunks

- Each process is then assigned a chunk to work on

- If we have lots of processors, we can get the work done faster by splitting the data into lots of chunks
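The steps above can be sketched in a few lines. This is only an illustration under stated assumptions: the helper names and the sample lines are made up, and a thread pool stands in for the separate processors or machines a real cluster would use.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(items: list, n_chunks: int) -> list[list]:
    """Split items into at most n_chunks pieces of roughly equal size."""
    size = max(1, -(-len(items) // n_chunks))  # ceiling division, at least 1
    return [items[i:i + size] for i in range(0, len(items), size)]


def count_words(lines: list[str]) -> int:
    """The work each worker does on its own chunk."""
    return sum(len(line.split()) for line in lines)


# Hypothetical input data for the illustration.
lines = ["a b c", "d e", "f", "g h i j"]
chunks = split_into_chunks(lines, 2)

# Each chunk is handed to a worker; the workers run concurrently.
with ThreadPoolExecutor(max_workers=2) as pool:
    partial_counts = list(pool.map(count_words, chunks))

# Combine the partial results into the final answer.
total = sum(partial_counts)
print(partial_counts, total)
```

The final `sum` is the only step that needs all the results at once; everything before it can run independently on each chunk.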

Scalability:

A Hadoop cluster is scalable: we can add more nodes (horizontal scaling) or increase the hardware capacity of the existing nodes (vertical scaling) to gain more computing power.

Fault Tolerance:

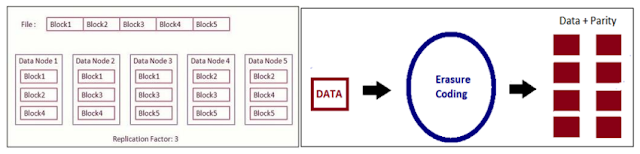

Fault tolerance is one of the most important features of Hadoop. HDFS in Hadoop 2 uses a replication mechanism to provide it.

It creates replicas of each block on different machines according to the replication factor (3 by default). So, if any machine in the cluster goes down, the data can still be read from the other machines holding a replica of the same block. Hadoop 3 adds erasure coding as an alternative to replication; replication remains the default. Erasure coding stripes a file into small cells that are spread across different DataNodes. For each stripe of original data cells, a certain number of parity cells are calculated and stored. If a machine fails, the lost cells can be reconstructed from the remaining data and parity cells. With a Reed-Solomon layout of 6 data cells and 3 parity cells, for example, the storage overhead is 50%, compared with 200% for three-way replication, so the raw storage needed drops by about half.
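The parity idea can be shown with a deliberately simplified sketch. It uses a single XOR parity cell, which can rebuild only one lost cell per stripe; it is not the Reed-Solomon coding used for layouts such as 6 data plus 3 parity cells. The function names and cell contents are assumptions for illustration, and all cells are assumed to have the same length.

```python
def xor_bytes(a: bytes, b: bytes) -> bytes:
    """XOR two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def make_parity(cells: list[bytes]) -> bytes:
    """Compute one parity cell for a stripe of equal-length data cells."""
    parity = bytes(len(cells[0]))  # start from all zero bytes
    for cell in cells:
        parity = xor_bytes(parity, cell)
    return parity


def recover(surviving: list[bytes], parity: bytes) -> bytes:
    """Rebuild the single missing cell from the survivors and the parity."""
    missing = parity
    for cell in surviving:
        missing = xor_bytes(missing, cell)
    return missing


# Hypothetical stripe of three data cells, each stored on a different machine.
cells = [b"AAAA", b"BBBB", b"CCCC"]
parity = make_parity(cells)

# Pretend the machine holding cells[1] failed.
recovered = recover([cells[0], cells[2]], parity)
print(recovered)
```

Here one parity cell protects three data cells, so the extra storage is one third of the data instead of the two extra full copies that three-way replication needs.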

Remote Procedure Call:

Metadata operations in HDFS go through Remote Procedure Calls (RPC) handled by the NameNode and DataNode services; the block data itself is moved between clients and DataNodes over a separate streaming protocol.

- ClientProtocol is the interface through which the client (FileSystem) communicates with the NameNode.

- DatanodeProtocol is the interface for communication between a DataNode and the NameNode.

- NamenodeProtocol is the interface for communication between the SecondaryNameNode and the NameNode.

- DFSClient is the object that calls the NameNode interface directly. User code reaches DFSClient through DistributedFileSystem to talk to the NameNode.

The MapReduce model relies on remote communication as well. When the map workers have completed their work, the master notifies the reduce workers to start working. The first thing a reduce worker needs is the data that it must present to the user’s reduce function. The reduce worker contacts every map worker via remote procedure calls to transfer the <key, value> data that was targeted for its partition (in Hadoop's own implementation, reducers fetch the map output over HTTP). This step is called shuffling.
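The shuffle step described in this section can be sketched without any networking. In this illustration the remote fetch is replaced by a plain in-memory loop, and the function names, the sample map outputs, and the CRC32-based partition function are all assumptions rather than Hadoop's actual code.

```python
import zlib
from collections import defaultdict


def partition_for(key: str, num_reducers: int) -> int:
    """Pick the reducer for a key using a stable hash (illustrative choice)."""
    return zlib.crc32(key.encode("utf-8")) % num_reducers


def shuffle(map_outputs: list[list[tuple[str, int]]], num_reducers: int) -> list[dict]:
    """Group every (key, value) pair from every map worker by target reducer."""
    partitions = [defaultdict(list) for _ in range(num_reducers)]
    for output in map_outputs:          # one list per map worker
        for key, value in output:
            partitions[partition_for(key, num_reducers)][key].append(value)
    return [dict(p) for p in partitions]


# Hypothetical output of two map workers in a word count job.
map_outputs = [
    [("apple", 1), ("pear", 1)],
    [("apple", 1), ("fig", 1)],
]
partitions = shuffle(map_outputs, num_reducers=2)

# Each reducer sums the values for the keys in its own partition.
reduced = {key: sum(values) for part in partitions for key, values in part.items()}
print(reduced)
```

Because the partition depends only on the key, all values for the same key end up at the same reducer no matter which map worker produced them.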

More Resources:

- Easy Install Single-Node Hadoop on Ubuntu 20.04

- How To Install Multi-Node Hadoop On Ubuntu 20.04

- Components of Hadoop Ecosystem

- Run MapReduce Job On Hadoop

Let’s Put It All Together:

We started out by defining distributed computing and then looked at how Hadoop exhibits each of its characteristics. Feel free to ask questions if you need any help.

Special Credits to Umair Hasan for helping out with this blog.

Good Luck!
