Best Practices For Apache Spark

In this post, I cover some best practices to follow when working with Apache Spark that should improve the performance of your application.

How to optimize Spark?

To enhance performance, we have to focus on achieving these:

- Reduce disk I/O and network I/O

- Use the CPU efficiently by avoiding unnecessary computation

- Benefit from Spark's in-memory computation, including caching when appropriate

Spark Best Practices:

- Use DataFrame/Dataset over RDD

- File formats

- Parallelism

- Reduce shuffle

- Cache appropriately

- Tune cluster resources

- Optimize Joins

- Lazy loading behavior

- Avoid expensive operations

- Data skew

- UDFs

- Disable DEBUG & INFO Logging

1. Use DataFrame/Dataset over RDD

An RDD (Resilient Distributed Dataset) is the core abstraction behind Spark. It is meant for low-level operations and benefits from fewer automatic optimizations.

A DataFrame is the best choice in most cases because it goes through the Catalyst optimizer, which builds an optimized query plan and so gives better performance. DataFrames also incur low garbage-collection overhead.

Datasets are type-safe and use encoders as part of their serialization. They also use Tungsten to serialize data in a compact binary format.

2. File formats

💠 Use Parquet or ORC (Columnar Format)

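💠 Spark has vectorized readers for Parquet and ORC that decode columns in batches, and columnar formats let it read only the columns a query needs, which reduces disk I/O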

💠 Make use of compression

💠 Use splittable file formats

💠 Ensure that there are not too many small files.

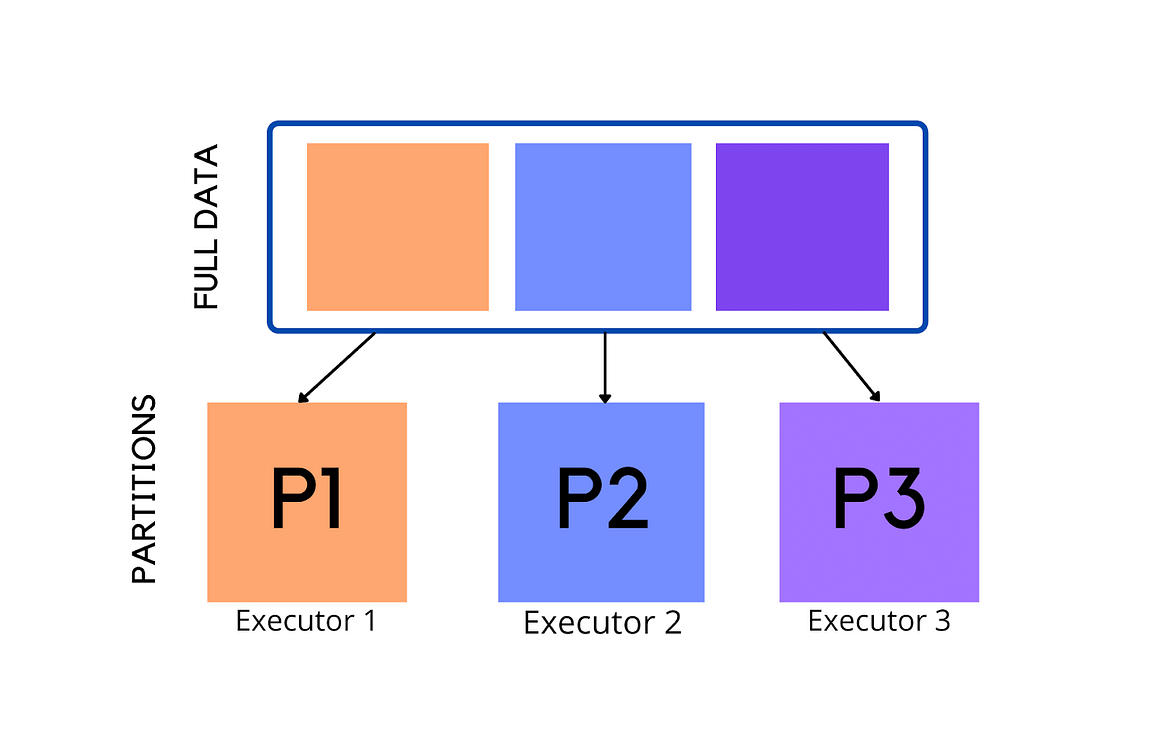
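One way to avoid producing many small files is to choose the number of output files from the data size before writing. The sketch below assumes a target of about 128 MB per file, which is an illustrative figure and not one taken from this post. `target_file_count` and `write_compacted` are hypothetical helper names, and `df` is assumed to be a PySpark DataFrame.

```python
import math

MB = 1024 * 1024


def target_file_count(total_bytes, target_file_bytes=128 * MB):
    """Number of output files so that each holds about target_file_bytes."""
    if total_bytes <= 0:
        return 1
    return max(1, math.ceil(total_bytes / target_file_bytes))


def write_compacted(df, path, total_bytes):
    """Write df as Snappy-compressed Parquet with a bounded number of files.

    df is assumed to be a PySpark DataFrame; total_bytes is the caller's
    estimate of the data size.
    """
    n = target_file_count(total_bytes)
    # repartition(n) shuffles the data into n partitions; Spark typically
    # writes one file per non-empty partition.
    (df.repartition(n)
       .write
       .mode("overwrite")
       .option("compression", "snappy")
       .parquet(path))
```

For an estimated 10 GB of data, `target_file_count(10 * 1024 * MB)` gives 80 files of roughly 128 MB each.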

3. Parallelism

Increase the number of Spark partitions to match the data size and raise parallelism. Too few partitions may leave some executors idle, whereas too many partitions add task-scheduling overhead.

Adjust the partitions and tasks as needed. Spark's tuning guide notes that Spark can efficiently run tasks as short as about 200 milliseconds and recommends 2-3 tasks per CPU core in the cluster.
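The tasks-per-core rule of thumb translates into a quick calculation. This is a minimal sketch: `suggested_partitions` is a hypothetical helper, and the executor counts used in the comment are made-up example values.

```python
def suggested_partitions(num_executors, cores_per_executor, tasks_per_core=3):
    """Partition count that gives each core tasks_per_core tasks to run."""
    if num_executors < 1 or cores_per_executor < 1 or tasks_per_core < 1:
        raise ValueError("all arguments must be positive")
    total_cores = num_executors * cores_per_executor
    return total_cores * tasks_per_core


# Hypothetical usage with a DataFrame named df on 10 executors of 4 cores:
# df = df.repartition(suggested_partitions(10, 4))
```

Treat the result as a starting point and adjust it after checking task durations in the Spark UI.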

4. Reduce shuffle

Shuffle is an expensive operation because it moves data among cluster nodes, which requires network and disk I/O. Reduce the quantity of data that needs to be shuffled wherever possible.

For large datasets, adjust spark.sql.shuffle.partitions so that each shuffle partition, and therefore each task, handles roughly 100 MB to 200 MB.
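The arithmetic behind that target can be sketched as follows. `shuffle_partitions_for` is a hypothetical helper, the 150 MB default is simply the midpoint of the 100 MB to 200 MB range, and the shuffle size is something you would estimate yourself, for example from the shuffle read figures in the Spark UI.

```python
import math

MB = 1024 * 1024


def shuffle_partitions_for(shuffle_bytes, target_partition_bytes=150 * MB):
    """Partition count that keeps each shuffle partition near the target size."""
    if shuffle_bytes <= 0:
        return 1
    return max(1, math.ceil(shuffle_bytes / target_partition_bytes))


# Hypothetical usage, assuming an active SparkSession named spark:
# spark.conf.set("spark.sql.shuffle.partitions",
#                shuffle_partitions_for(300 * 1024 * MB))
```

With an estimated 300 GB of shuffled data this yields 2048 partitions, far above Spark's default of 200.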

spark.conf.set("spark.sql.shuffle.partitions", 100)

5. Cache appropriately

Use caching when the same dataset is computed and reused multiple times, and unpersist cached datasets when you are done with them in order to release resources.
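A small wrapper can make the cache-then-release pattern hard to forget. This is a sketch, not an established idiom: `with_cache` is a hypothetical helper, and `df` is assumed to be a PySpark DataFrame, whose `cache()` and `unpersist()` methods are the only Spark calls used.

```python
def with_cache(df, work):
    """Cache df, run work on it, and always release the cache afterwards."""
    # cache() is lazy: the data is materialized by the first action in work.
    cached = df.cache()
    try:
        return work(cached)
    finally:
        # Free the executor memory (and disk) held by the cached data,
        # even if work raised an exception.
        cached.unpersist()


# Hypothetical usage with a DataFrame named events that is scanned twice:
# total, unique = with_cache(
#     events, lambda d: (d.count(), d.distinct().count()))
```

Caching only pays off when the cached data is read more than once, so a wrapper like this is meant for work that runs several actions.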

spark.conf.set("spark.sql.inMemoryColumnarStorage.compressed", true)

spark.conf.set("spark.sql.inMemoryColumnarStorage.batchSize", 10000)

6. Tune cluster resources

💠 Tune the resources on the cluster depending on the resource manager and version of Spark.

💠 Tune the memory available to the driver: spark.driver.memory.

💠 Tune the number of executors and the memory and core usage based on resources in the cluster: executor-memory, num-executors, and executor-cores.
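The settings above map onto spark-submit command-line options. The sketch below only assembles the argument list; `spark_submit_args` is a hypothetical helper, the values in the usage comment are placeholders rather than recommendations, and `--num-executors` is honored only by resource managers that support it, such as YARN.

```python
def spark_submit_args(app, driver_memory, executor_memory,
                      num_executors, executor_cores):
    """Build a spark-submit argument list from the main resource settings."""
    return [
        "spark-submit",
        "--driver-memory", driver_memory,        # same as spark.driver.memory
        "--executor-memory", executor_memory,    # memory per executor
        "--num-executors", str(num_executors),   # number of executors
        "--executor-cores", str(executor_cores), # cores per executor
        app,
    ]


# Hypothetical usage; my_job.py and all values are placeholders:
# import subprocess
# subprocess.run(spark_submit_args("my_job.py", "4g", "8g", 10, 4), check=True)
```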

7. Optimize Joins

💠 Joins are an expensive operation, so pay attention to the joins in your application to optimize them.

💠 Avoid cross-joins.

💠 A broadcast hash join is usually the most performant strategy, but it does not apply when both relations in the join are large, because one side has to be small enough to send to every executor.
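When one side of a join is known to be small, you can ask the planner for a broadcast join explicitly. This sketch uses the DataFrame `hint("broadcast")` method; `join_with_small_table` is a hypothetical helper, and both arguments are assumed to be PySpark DataFrames that share the join key.

```python
def join_with_small_table(large_df, small_df, key):
    """Inner-join large_df to small_df, hinting that small_df be broadcast."""
    # The hint asks Spark to ship small_df to every executor so that
    # large_df does not have to be shuffled for the join.
    return large_df.join(small_df.hint("broadcast"), on=key, how="inner")


# Hypothetical usage with DataFrames named orders and countries:
# enriched = join_with_small_table(orders, countries, "country_code")
```

Spark also broadcasts automatically when it estimates a table to be below spark.sql.autoBroadcastJoinThreshold, so the hint mainly helps when that estimate is unavailable or too pessimistic.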

8. Lazy loading behavior

Apache Spark has two kinds of operations: transformations and actions.

Transformations are evaluated lazily: Spark records them in a plan and runs nothing until an action is called. When writing transformations that return another dataset from an input dataset, you can therefore write them in a legible, step-by-step manner. You don't have to worry about optimizing them or placing them all in one line, because Spark optimizes the flow for you behind the scenes.

Actions are eager in the sense that each one triggers the computation it depends on. A typical issue I have noticed is count() calls that were added to Spark apps during debugging and never removed. It's a good idea to search for Spark actions and eliminate those that aren't required, because we don't want to waste CPU cycles and other resources.
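If row counts are useful while debugging, one option is to route them through a helper that is off by default, so a forgotten call costs nothing in production. This is a sketch under assumptions: `debug_count` and the logger name are hypothetical, and `df` is assumed to be a PySpark DataFrame.

```python
import logging

logger = logging.getLogger("spark_app")  # hypothetical logger name


def debug_count(df, label, enabled=False):
    """Log a row count only when explicitly enabled.

    count() is an action and triggers a full Spark job, so when enabled
    is False nothing is computed and None is returned.
    """
    if not enabled:
        return None
    n = df.count()
    logger.info("%s: %d rows", label, n)
    return n


# Hypothetical usage; DEBUG_COUNTS would come from your own configuration:
# debug_count(filtered_df, "after filter", enabled=DEBUG_COUNTS)
```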

9. Avoid expensive operations

💠 Avoid order by if it is not needed.

💠 When you are writing your queries, instead of using select * to get all the columns, only retrieve the columns relevant to your query.

💠 Don’t call count unnecessarily.

10. Data skew

Keep partitions roughly equal in size to avoid data skew and low CPU utilization.

When you want to reduce the number of partitions, prefer coalesce(): it merges existing partitions and avoids the full shuffle that repartition() performs.

Repartition: Produces evenly sized partitions, at the cost of a full shuffle.

Coalesce: Generally reduces the number of partitions with less shuffling.

Note: Use repartition() when you want to increase the number of partitions.
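The choice between the two calls can be wrapped in one function. This is a minimal sketch: `resize_partitions` is a hypothetical helper, and `df` is assumed to be a PySpark DataFrame, whose current partition count is read with `df.rdd.getNumPartitions()`.

```python
def resize_partitions(df, target):
    """Return df with target partitions, shuffling only when it has to."""
    if target < 1:
        raise ValueError("target must be at least 1")
    current = df.rdd.getNumPartitions()
    if target < current:
        # Fewer partitions: coalesce merges existing ones without a full shuffle.
        return df.coalesce(target)
    if target > current:
        # More partitions: only repartition can increase the count.
        return df.repartition(target)
    return df


# Hypothetical usage before writing a small result set:
# result = resize_partitions(result, 8)
```

Because coalesce() merges partitions as they are, it can leave them uneven in size; if the data is badly skewed, an explicit repartition() may still be the better choice despite the shuffle.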

11. UDFs

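Spark ships with a large number of built-in functions. Before writing a user-defined function (UDF), check whether one of the built-in functions does the job: built-ins are understood by the Catalyst optimizer and perform well, whereas a UDF is opaque to the optimizer.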

12. Disable DEBUG & INFO Logging

DEBUG and INFO logging both generate a lot of I/O and hence cause performance issues when you run Spark jobs with greater workloads. In a log4j properties file, raise the root logger level to WARN:

log4j.rootLogger=warn, stdout


See you next time,

@TechAE
